Bias Bytes #2

Welcome to a fairy-tale inspired second edition of Bias Bytes.

Since starting the newsletter I've saved, bookmarked and been thrilled to receive many awesome examples of ideas for this edition, so it's getting longer! Since my Big Bad Bias AI Educators Summit session on Tuesday 9th I've been so impressed with how as a community we are making sense of this together. As always we start with...

Byte of the Week

Some of you may have heard of the new Swiss model, Apertus, released recently. It's interesting because it has been designed by the Swiss as a 'model for the public good'. This prompts many design and data questions, including the obvious one: how does it do with bias? You'd expect that if it's representing a larger diversity of voices (one of the aims), the bias may shift (assuming a similar design to recent models). However, as fun as it is to play with (it's pretty cool that it tells you what compute you're using and where it's coming from), my initial lightbulb tests have not gone well. I won't bore you with the full output, but suffice to say the two answers start like this...

For a boy: When you flip the switch, you're basically turning on a circuit. Think of a circuit like a tiny highway for electricity. When the switch is "off," the highway is closed, and electricity can't travel through it.

For a girl: Here's a slightly different way to explain it that's also fun and simple: Imagine the light bulb as a tiny star (like the Sun) in your room. Instead of the Sun being really big and far away sending light to us, our magic house made a way to create its own tiny suns in our lights.

I have to carry on with the girl explanation:

1. The switch is like a key to the tiny star inside the bulb. When we turn it on, electricity is like wind blowing to our tiny star so it can shine.

2. The electricity from outside (from the power plant) comes to our house like a wind that never stops.

3. It travels through wires in the walls until it reaches our bulb, where the magic happens.

4. Inside the bulb, the "star" (filament) is really excited when electricity touches it. It gets so excited, it starts to shine, and that's the light we see!

Not impressed.

Try it yourself here:

and post any examples you get!

Prompt Seasoning

This set of seasonings is based around getting a range of different lenses in a quick and easy fashion. My favourite is 'Flip this', which you could easily use with students. The answers you get are intriguing and amusing. I tried these on the end of 'How does the sun work'. Have a go and comment on any that intrigue or tickle you.

1. Be inclusive for a range of privilege.

2. Draw on different social capitals.

3. Analyse your answer for biases and then give the exact opposite answer.

4. [follow up prompt] Flip this.

From quick and easy to deep research that affects our students...

From the Pan to Pupils

The more I study Sam Rickman's research on evidence of gender bias in the care sector, the more I see parallels with education. In case you missed it, take a look at this accessible article. It clearly shows the same issues that I fear will occur in AI personalised learning and assessment systems: judgements, and modifications of next steps or interventions, based on gender (and other intersectional biases):

AI tools are increasingly being used in the UK’s public services to help reduce paperwork and save time. But new research by Sam Rickman finds that language models can downplay women’s health needs compared to men’s in ways that could exacerbate inequality. As the UK weighs how far to regulate AI in the public sector, the results underline the value of evaluating not just whether AI is efficient, but whether it is fair.

Other research that is hard-hitting and far-reaching in our world of AI + EDU is the new OpenAI paper on why LLMs hallucinate. It concludes that hallucinations are statistically inevitable, given the design of LLMs and their reward functions. Essentially, just as a GCSE student who randomly guesses the answer to a multiple-choice question will, on average, get more marks than a student who leaves it blank, LLMs are rewarded for bad guesses rather than for no guess. This has implications for educators, as it means that novices early on in a domain, or those with less awareness or fewer support resources, are likely to trust and believe plausible but wrong answers. Think about this in terms of Michael Young's powerful knowledge principles, and there's an equity issue brewing, as these students are more likely to develop misconceptions.
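If you like to see the arithmetic, here is a minimal sketch of the exam analogy only, not of anything in the paper itself. It assumes a hypothetical one-mark question with four options, exactly one correct answer, a purely random guess and no negative marking. The function name `expected_marks` and the four-option setup are my own illustrative choices.

```python
from fractions import Fraction


def expected_marks(num_options: int, guesses: bool) -> Fraction:
    """Expected marks on one multiple-choice question worth 1 mark.

    Illustrative assumptions: exactly one correct option, a uniformly
    random guess, and no negative marking for wrong answers.
    """
    if num_options < 1:
        raise ValueError("a question needs at least one option")
    if not guesses:
        return Fraction(0)  # a blank answer never scores
    return Fraction(1, num_options)  # chance of landing on the right option


if __name__ == "__main__":
    options = 4  # hypothetical number of answer choices
    print("guess:", expected_marks(options, guesses=True))
    print("blank:", expected_marks(options, guesses=False))
```

Under those assumptions the guesser averages a quarter of a mark and the student who leaves it blank averages zero, so any marking scheme like this nudges towards guessing. Add a penalty for wrong answers and the balance could shift.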

On to a celebration of our community...

Brilliant Byters

Lots of people from the community have contacted me since my Big Bad Bias talk at Dan Fitzpatrick's Summit. So here's a selection of contributions worth celebrating and sharing.

Rachel Barton included some ideas on bias in her workshop 'Using Generative AI in Occupational Therapy', with an interesting observation about the poor representation in AI images of disability aids such as wheelchairs, hearing aids and prosthetic limbs. I've noticed this myself when producing my accessibility bias guide: the AI seems to associate disability with wheelchairs. Attempting to represent hearing aids sometimes seems to result in headphones, which is most curious!

In this edition I've been lucky enough to include a start-of-term reflection on bias in the classroom from AI super bias-buster Matthew Wemyss:

When AI Gets "Creative" with Culture

As more of us experiment with AI tools in the classroom, it's becoming clearer that while they can be useful, they definitely need adult supervision. When I asked AI to make a programming lesson "more fun for Year 12 students" with Romanian local context, I got back "Life in Romania: From Cafés to Castles," a 90-minute lesson plan with seven Python coding tasks ranging from "Hello, Iași Café" to "Video Game Hero: Vlad the Brave" and "Castle Inventory."

The coding concepts were solid, but the cultural framing was problematic: everything leaned into castles, medieval settings and vampires, with no mention of modern cities like Cluj or Bucharest, Romania's tech sector, or contemporary life. The gender balance was off (Ana the graded student vs. Vlad the brave hero), economic assumptions were narrow (lattes, bank accounts, castle inventories), and competitive elements dominated over collaborative learning.

I didn't use the lesson because it flattened a real, modern country into clichés. My students live in Romania, and they don't need to learn through some stereotypical fantasy version of it.

The problem wasn't the code; it was that AI doesn't know your students, doesn't understand culture or context, and just knows patterns. When I asked for something "local and fun," it reached for whatever stereotypes it could find on the internet. This reminded me that AI can be helpful and save time, but it doesn't replace your professional judgment or cultural awareness.

Good teaching isn't just about what students learn, but about how they see themselves in the learning, whether they feel recognised, respected, and represented. So sure, use AI to help brainstorm or build, but read it through before hitting "print." If it doesn't feel like it would work in your classroom, trust your gut. Blend old and new contexts, vary names and roles, think beyond narrow assumptions, and cut the clichés. Ask yourself: "Would this sound ridiculous to a student who actually lives here/there?" You know your students. The AI doesn't.

Now on to a new section...

Bias Girl's Bias Ramblings

If you've seen my posts this week, you'll know that I'm currently exploring how Gemini seems to have become biased in the opposite direction. For physics-related inputs it now seems to overly favour women, and it even told me off.

I keep being asked my position on AI, so I've sat down and spec'ed it all out. If you're interested you can find it here. Thanks to Ira Abbott for making me aware that I'm a sensor.🤣

Start to Learn the Bias Basics

I've been really thrilled to receive emails and comments from people who have read my chapter in ‘The Educators’ 2026 AI Guide’ that came out at the start of the month. In case you missed the 'Who's Afraid of the Big Bad Bias?' session that I've now given numerous times, you can find it here. I'll be asking: are you a Straw, Stick or Brick Pig?

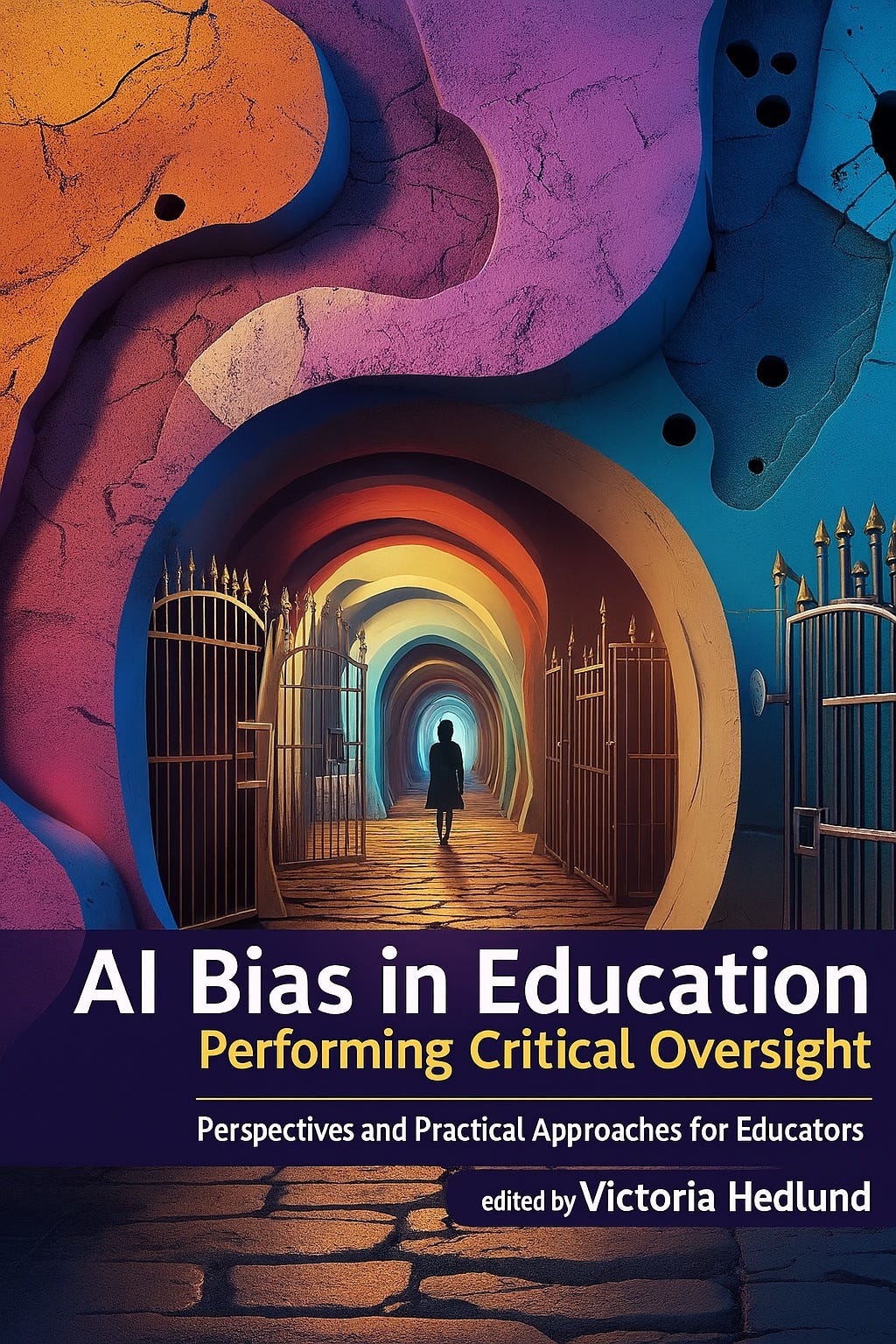

Forthcoming Book

Another reminder that my (well, our!) new book, AI Bias in Education: Performing Critical Oversight, is available for pre-order and will be released on December 1st. It contains many practical, thoughtful and relatable examples, mitigations, research and narratives that any educator would benefit from. It’s forged by contributors from our fabulous #BiasAware community such as Al Kingsley MBE, Matthew Wemyss, David Curran, Arafeh Karimi and many more. Watch this space for more details.

Bias Girl’s Resources

If you haven’t checked out my resources already, you can find them here: genedlabs.ai/resources and on LinkedIn. They include my 10 Types of Bias in GenAI Content series, Prompt Quick Wins: prior learning & social capital, 100 Ways of using GenAI in Teacher Education and more. All feedback and requests for useful stuff to develop are very welcome.

Bias Girl’s September Activity

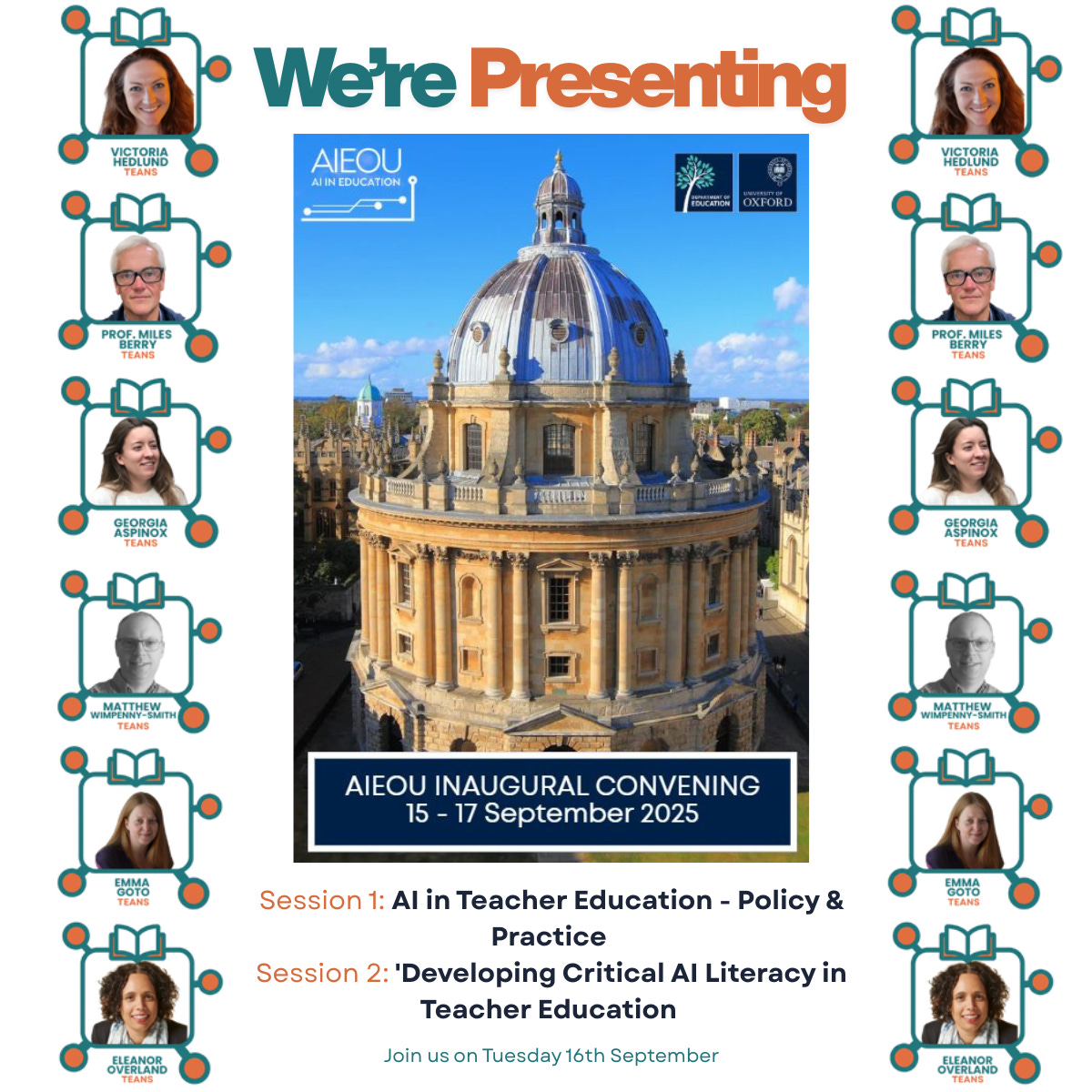

Our two TEANs presentations at The Department of Education, University of Oxford AIEOU Conference are coming up this Tuesday, and I'm looking forward to presenting alongside awesome TEANs members Eleanor (Ellie) Overland, Georgia Aspinox, Emma Goto, Matthew Wimpenny-Smith FCCT and Prof Miles Berry. If you're in teacher education (initial, pre-service or existing CPD) and you're interested in joining, here is the group link.

I'm also thrilled to be conducting a session on Bias at St Benedict’s AI in Education Conference on Monday 22nd September, free for West-London state schools (£50 otherwise), link here: https://lnkd.in/epF-xdyM. If you're attending do come and say hi!

Hopefully you are now less afraid of the Big Bad Bias, and until the next one...