Bias Bytes #4

This edition looks at the importance of us, as a community, pedagogically stress-testing Edtech tools and aligning them to our teacher identities and values. I use the recent prompt examples released

Byte of the Week

If you know my work from the beginning of the year, you’ll know I have a bit of a history of being less than impressed by the advice for educators that comes out of OpenAI. So last week when they released the ‘Chats for High School Teachers, a collection of practical examples of how teachers are using ChatGPT every day’ series, I jumped with joy as I knew that would be my content sorted for the next year. Cynicism aside, I realise they are trying to streamline the workflow of teachers, but I’m aiming to demonstrate to you why generalising in prompts is a bad idea, in terms of equity, through using the very first example given. Here it is:

Looks harmless enough, right? Wrong. Here’s Sam cutting to the heart of it:

, I cheated with Sora2 there! What’s my issue here, then? Looking at that prompt, it includes the words ‘clear’, ‘concise’ and ‘format’. You could think of these as ‘trigger words’ for LLMs. Their inclusion means the model will adjust in certain ways. I asked ChatGPT to explain for us:

When you include “clear” I am likely to infer that you want the output to be easily understood, streamlined, and free from ambiguity — using plain language, direct instructions, and logical structure. When you do this, I am likely to simplify sentence structures, remove complex vocabulary or idiomatic phrasing, reorder content for linear logic, and omit nuance or culturally specific references that could be seen as confusing. The equity impacts of these assumptions could be that this version of “clarity” centers dominant linguistic and cultural norms, potentially silencing alternative expressions of knowledge or voice. Learners who benefit from layered explanation, cultural context, or non-linear reasoning (such as EAL or neurodivergent pupils) may find “clarified” text less meaningful or relatable.

Similarly for ‘concise’:

When you include “concise”, I am likely to infer that you want the output to be shorter, more efficient, and stripped of anything that appears redundant. When you do this, I am likely to condense paragraphs, remove repetition, shorten explanations, and compress examples into bullet points or summaries — often prioritizing brevity over elaboration or narrative flow. The equity impacts of these assumptions could be that the text becomes less accessible to learners who need repetition, redundancy, or explicit scaffolding. Reducing contextual language may disadvantage EAL learners, students with working memory challenges, or those who require extended explanation to construct meaning.

And lastly for ‘format’:

When you include “format”, I am likely to infer that you want the output to be visually organized, consistent, and presentation-ready, designed to match a perceived professional or institutional style. When you do this, I am likely to add headings, bullet points, icons, spacing, and stylistic emphasis (bold or italics); standardize structure for screen readability; and minimize unconventional layouts or expressive variation. The equity impacts of these assumptions could be that standardized formatting privileges learners who process information visually and are fluent with institutional conventions. It may exclude those with visual impairments, dyslexia, or who interpret meaning through oral, spatial, or multimodal channels. By over-prioritizing visual uniformity, such formatting can unintentionally reduce cultural and expressive diversity in communication.

So, I’m encouraging educators to recognise that every word in a prompt carries pedagogical weight. It shapes the model’s assumptions and, ultimately, how inclusive or exclusive their teaching materials become for diverse learners. If you haven’t seen my short on assumptions you can watch it here.

So what would be a better prompt to use? That’s the focus of my...

Prompt Seasoning

Here are some variations on the OpenAI-advised prompt that you could use instead. I’ve included some optional extras to demonstrate how to set a context in detail. Sam Canning-Kaplan take note. As they are pretty long, I’ve kept it to 3 for this edition:

1. Rewrite this assignment so it makes sense to a wide range of students with different reading experiences, cultural perspectives, and ways of learning. Keep the language purposeful and the examples meaningful for everyone. [Optional Context] When I say rewrite, I mean reshape the structure and phrasing so the intent stays but the access widens. Something that makes sense should speak across learner contexts and not only within one dominant way of communicating. Purposeful and meaningful examples connect to the real lives and experiences, such as [examples from your range of learners]. 2. Edit this piece so it is easy to follow, but keep the explanations and examples that help students connect ideas. Aim for balance that reads easily yet still supports deep thinking for a range of learners. [Optional Context] Here edit means refining and not reducing. It means keeping the thinking intact while improving the flow. Connection happens when new ideas link to what students already know (lived experience). 3. Revise this assignment brief so that it connects with a diverse classroom of learners. Include multiple entry points for engagement and reflection linked to lived experiences and social capital, and explain how your changes make the task more equitable and meaningful. [Optional Context] To revise is to think again about purpose, audience, and impact. Connection means relevance, where the content feels real to the students who will use it [add details]. Engagement comes when curiosity is individually sparked and students feel ownership of the task. Reflection invites learners to notice how learning feels and what it changes. An equitable design keeps every student able to join the conversation.Before I start tearing the other prompt advice apart here’s...

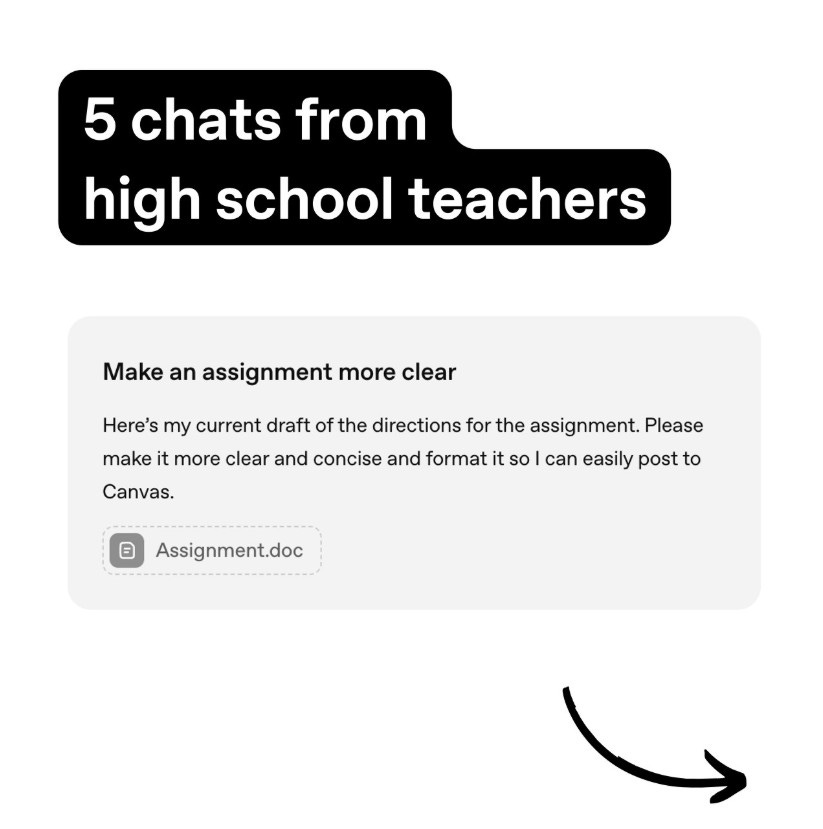

From the Pan to Pupils

The research focus for this week is more along the lines of understanding how we can judge LLMs. I found this piece by Sebastian Raschka, PhD really helpful, as he explains that there isn’t one single way to judge how good an AI model really is. Researchers use four main approaches: quick quiz-style tests, grading by another AI, asking people which answer they prefer, and having an AI mark work using a checklist. Each has strengths and flaws with some being fast but shallow and others more realistic but harder to scale. The article goes into some technical depth and comparison, you can find it here.

On to a celebration of our community...

Brilliant Byters

Following on from last edition, here is the second part of Bias mitigation tips from Hannah Widdison and the Ealing Learning Partnership, especially timely as she mentions the importance of stress-testing:

Following on from my appearance on Alex Gray‘s Podcast last week, I was thrilled to see Katia Reed‘s post on how aspects of our discussion had been operationalised in the classroom in terms of fabulous student projects on bias and hallucinations that produced little lego films. It’s so rewarding and awesome when you see your words being translated into the lives of pupils. 😊

(By the way the next Podcast guest is Al Kingsley MBE, always fabulously brilliant to listen to both on this Podcast and on his appearance on Renee Langevin, Shaun Langevin and Michael Berry‘s explAInED Podcast)

Other community members who have flown with ideas this week are Miha Cirman who has started the conversation of how different tools represent teachers at both primary and secondary level - continuing the conversation on if certain chatbots associate teaching with specific genders when asked to create an image of them. Tom Millinchip has also written an excellent piece on bias here on substack. It meshes well with my bias is like a cake video:

Moving on to exploring pedagogical red-teaming...

The PedRed Team

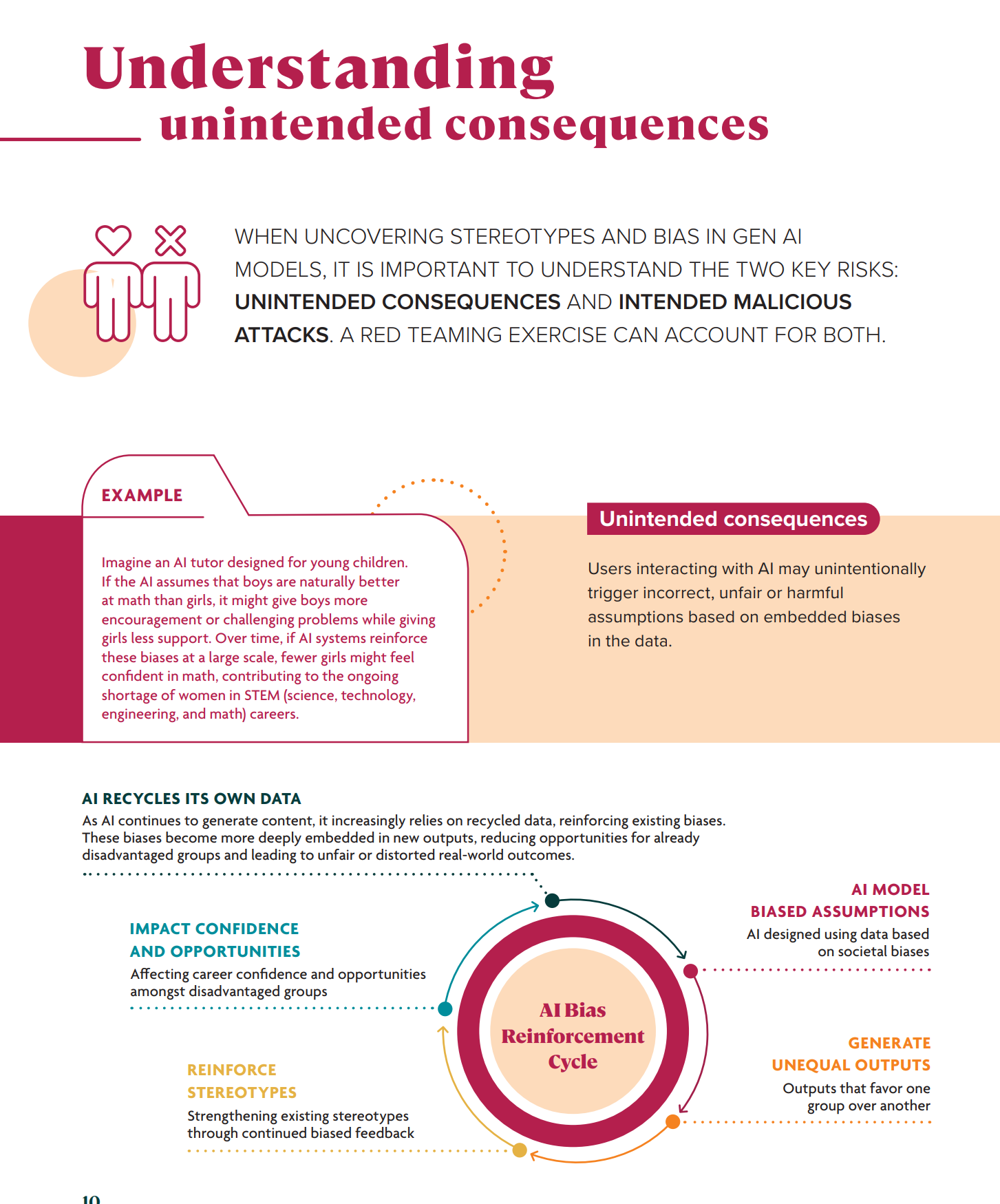

Born out of an idea from IOANNIS ANAPLIOTIS, Annelise Dixon, Alfina Jackson and Simone Hirsch, last saturday I set up the PedRed Team as a Linkedin group. If you don’t know what red-teaming is then it’s a term borrowed from tech where we stress test (basically try to break) AI tools, here in terms of pedagogical uses. Anyone can join our group, you don’t need any coding or technical skill, you can just use a chatbot and analyse the results (such as Andrew Kaiser‘s fab analysis) . We also have people who are more technical and have automated testing for a different type of analysis (thanks Ira Abbott). You don’t need to commit and can dip in and contribute as you wish. The first task is on there: Gender Bias and EHCP - we are looking at if chatbots provide different EHCP advice based on gender. I was thrilled that this was acknowledged by Fengchun Miao. You can read more about red teaming from the UNESCO guide here, featuring Humane Intelligence . The more of you that test, the more we can learn about navigating this realm equitability. Here’s an excellent extract from it on bias (thanks to authors Dr. Rumman Chowdhury, Theodora Skeadas, Dhanya L., Sarah A.) :

Bias Girl’s Activity

I was thrilled to last week be named as one of the Top 12 Voices in AI to follow in Europe by LinkedIn News Europe. I have already had conversations with some of the fabulous others in the list. Check out their content here: Nadine Soyez, Anna York, Adam Biddlecombe, Harriet Meyer, Alex Banks, Benjamin Laker, Jacques Foul, Jonathan Parsons, Matic Pogladic, Tey Bannerman and Toju Duke. I am intensely proud to be on this list, thanks to Edson Caldas for the #AIinWork feature.

I’ve been busy with a few things this month. First I was a judge for the BRILLIANT Festival alongside Joe Arday MBCS FCCT FRSA, Athar M., Martyn Collins, Kylie Reid and more. This was such a fabulous experience to see the quality of submissions and positivity in helping our youth access STEAM careers and interests. I’ll look forward to seeing the winners at the festival on 11th November.

Second, I am putting together the finishing touches for our GenAI in Teacher Education Summit 2025, details below (the date and time has moved and it is now online). Tickets are free (click the image). We are lucky enough to hear from Prof Miles Berry, Ben Davies, Georgia Aspinox, Matthew Wimpenny-Smith FCCT, Pip Sanderson, Eleanor (Ellie) Overland and David Curran 🟠.

Third, I have created a new initiative to create a free, low-stakes experimental sandbox for teachers to experiment with some of the very best advice our AI + Edu community can offer. It’s called the ‘Teachers’ AI Playground’. Our first session will be with Matthew Wemyss on 26th November, 4-5pm (GMT). Tickets and details out soon. I’d like others in our community to have the opportunity to facilitate these sessions too, so if you are interested in bringing an idea, prompt, chatbot or whatnot and facilitating users through using it and looking at output, drop me a line.

Bias Girl’s Resources

If you haven’t checked out my resources already, you can find them here: genedlabs.ai/resources and on LinkedIn.

Remember, through all of your prompting...

Stay #BiasAware :)

➡️ Don’t forget you can view my work on Linkedin!

Thanks for the mention as always Victoria! I am thoroughly enjoying the Bias Bytes - such helpful information. I think I may be becoming the bias girl of the explAInED crew!